Scientists warn that generative technology could make it easier and cheaper for more people to spread false stories and conspiracy theories.

Exploring the Limits of AI: Researchers Study ChatGPT’s Ability to Handle Conspiracy Theories and False Narratives

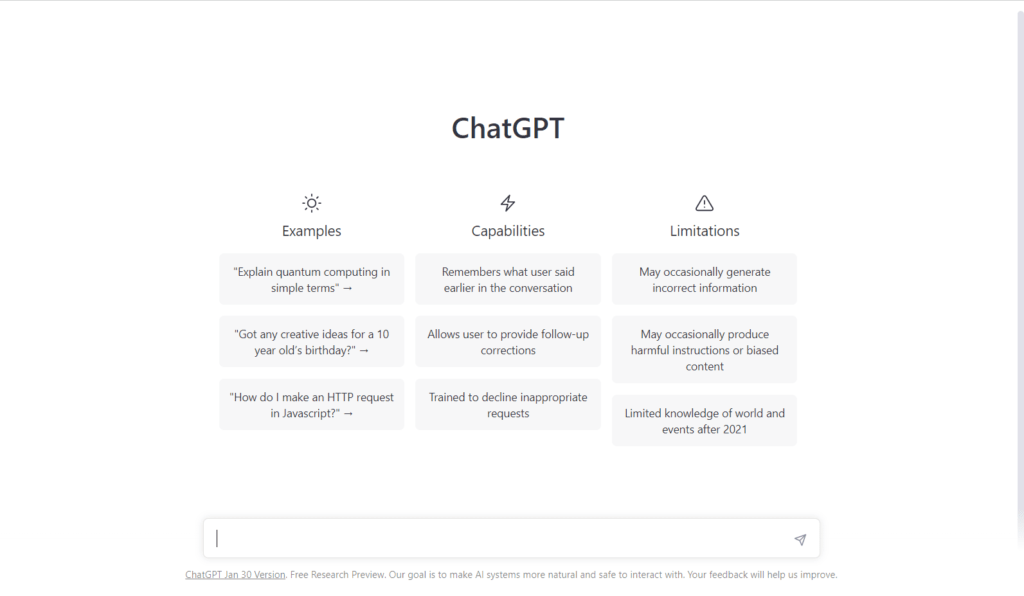

A team of researchers recently conducted a study to test the capabilities of ChatGPT, the artificial intelligence chatbot. The goal was to see how ChatGPT would respond to questions that contained conspiracy theories and false narratives.

The results of the study were eye-opening. ChatGPT generated informative and well-structured written content, such as news articles, essays, and television scripts, even when faced with complex and challenging questions.

The researchers were impressed by ChatGPT’s writing skills: it communicated the information contained in the questions effectively and presented it clearly and concisely. They were also pleased with the ethical and responsible way in which ChatGPT approached the sensitive subject matter, avoiding the spread of false information.

The Rise of AI-Generated Disinformation

Manually created disinformation has already proven difficult to control and contain. Recent advances in generative technology have raised concerns that disinformation could now be produced and spread at a much larger scale.

A recent study predicts that AI chatbots such as ChatGPT could make disinformation cheaper and easier to produce for an even larger number of people, including conspiracy theorists and other spreaders of false information.

The potential for AI technology to be misused for malicious purposes, such as spreading disinformation, highlights the urgent need for responsible development and use.

The study serves as a warning about the potential effect of AI technology on the spread of disinformation and about the importance of developing strategies to address this growing problem. The AI community and government must work together to mitigate the impact of AI-generated disinformation on society.